Helldivers 2’s New ‘Slim’ Version Saves 131GB of Space on Your Drive

Key Takeaways

- Helldivers 2 dramatically reduced its PC installation size by 131GB (from 154GB to 23GB) through aggressive data deduplication.

- The original footprint came from duplicating data to speed up loading on hard disk drives (HDDs), a strategy that proved largely ineffective because level generation, not asset loading, was the main load-time bottleneck.

- This optimization provides critical insights for businesses, demonstrating significant potential for cost savings in storage and bandwidth, enhanced software development, and improved operational efficiency.

- Key strategies for businesses include data deduplication, compression, asset/code refactoring, tiered storage management, and database optimization, with AI poised to revolutionize future optimization efforts.

- Beyond performance and cost, a lean digital footprint indirectly offers benefits for cybersecurity and operational agility by reducing attack surfaces and enabling faster security updates.

Table of Contents

- The Genesis of a Gigantic Footprint: What Led to 154GB?

- The ‘Slim’ Revolution: Deduplication and Its Impact

- Beyond Gaming: The Business Imperative of Data Efficiency

- Optimizing Your Digital Footprint: Key Strategies for Businesses

- Cybersecurity and Agility: An Indirect Benefit

- The Path Forward: A Call for Continuous Optimization

- FAQ Section

- Conclusion

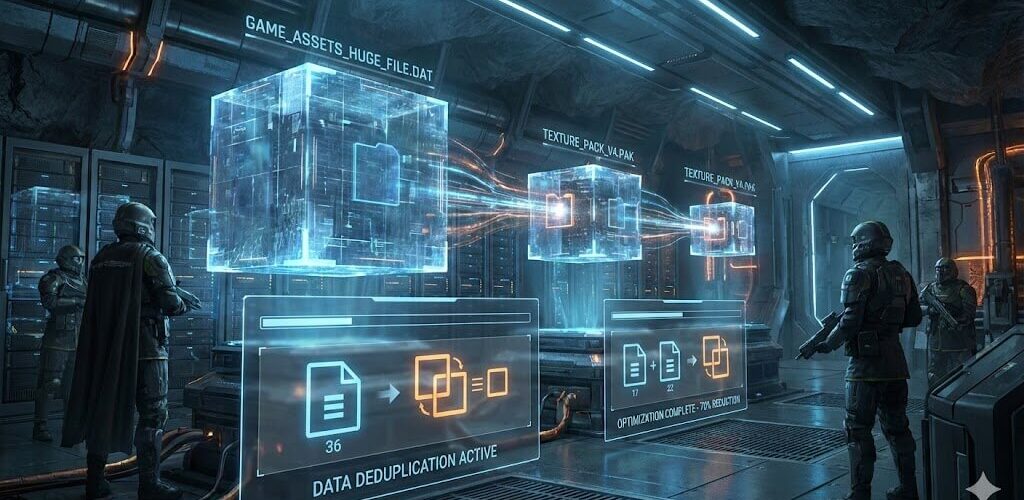

In an era of accelerating digital transformation and ever-growing data volumes, the efficiency of our software and the management of our digital assets have become paramount. This holds true not only for mission-critical enterprise applications but also for the entertainment sector, as a recent change to a popular game shows. The cooperative shooter Helldivers 2 has undergone a significant optimization: its developers have implemented a “slim” version that reduces the game’s PC installation size by 131GB, from approximately 154GB to 23GB. The achievement is a compelling case study in data deduplication and efficient software engineering, and its lessons reach well beyond gaming, to businesses pursuing operational efficiency and cost savings in their digital infrastructure.

The Genesis of a Gigantic Footprint: What Led to 154GB?

To truly appreciate the magnitude of Helldivers 2’s optimization, it’s essential to understand the technical rationale behind its original, much larger file size. Initially, the game occupied approximately 154GB of drive space on PC. This bulk was not merely the result of high-resolution textures or expansive game worlds; it was a deliberate engineering decision aimed at improving performance for a specific subset of players. The developers had opted for a strategy of “data duplication”: storing multiple copies of certain game assets, such as textures, models, and audio files, across the game’s directory structure.

The primary motivation for this seemingly counterintuitive approach was to shorten load times for players using mechanical hard disk drives (HDDs). HDDs, while cost-effective for mass storage, are far slower than solid-state drives (SSDs) at random access, because each read from a new location requires moving the read head and waiting for the platter to rotate into position. The usual rationale for duplication is that copying shared assets into each bundle that needs them lets related data sit together on disk, so it can be read in long sequential runs instead of through many scattered seeks. The developers estimated that around 11% of their player base used HDDs, making this an optimization for a significant, albeit minority, segment.
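The trade-off behind duplicating data for HDDs can be sketched with a deliberately simple model in which every seek costs a fixed latency and data then streams at a fixed sequential rate. The seek time, throughput, and asset counts below are hypothetical round numbers chosen for illustration, not measurements from Helldivers 2, and `hdd_read_time_s` is a name invented for this sketch.

```python
# Illustrative HDD model: a fixed cost per seek plus sequential transfer time.
# All numbers are hypothetical assumptions, not Helldivers 2 measurements.
SEEK_MS = 10.0           # assumed average seek + rotational latency per random read
THROUGHPUT_MB_S = 150.0  # assumed sustained sequential read speed


def hdd_read_time_s(asset_sizes_mb, seeks):
    """Estimate read time in seconds: one latency penalty per seek plus transfer time."""
    transfer_s = sum(asset_sizes_mb) / THROUGHPUT_MB_S
    return seeks * SEEK_MS / 1000.0 + transfer_s


# A hypothetical level that needs 500 assets of 2 MB each.
assets = [2.0] * 500

# Shared assets stored once and scattered across the disk: roughly one seek per asset.
scattered = hdd_read_time_s(assets, seeks=len(assets))
# Shared assets duplicated into one contiguous bundle for this level: a single seek.
contiguous = hdd_read_time_s(assets, seeks=1)

print(f"scattered: {scattered:.2f}s, contiguous: {contiguous:.2f}s")
```

On these assumed numbers the contiguous layout saves about five seconds of I/O. The model says nothing about work that is not I/O, such as generating a level, which is where the real bottleneck turned out to be.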

However, as development progressed and performance metrics were analyzed more closely, a crucial insight emerged: the true bottleneck for load times in Helldivers 2 was not the loading of game assets but rather the complex, dynamic process of “level generation.” This realization fundamentally shifted their understanding of the game’s performance profile. The data duplication strategy, while intended to alleviate asset loading woes for HDD users, was providing minimal benefit in the face of the overarching level generation challenge. Furthermore, it imposed a significant cost on all players by ballooning the game’s installation size, making downloads longer, consuming valuable storage space, and potentially deterring new players with storage limitations.
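Why faster asset reads did so little follows from Amdahl’s law: if only one stage of a process gets faster, the untouched remainder caps the overall gain. The sketch below assumes, purely for illustration, that asset I/O is 20% of load time and level generation the other 80%; these shares are hypothetical, not figures reported by the developers, and `overall_speedup` is a name chosen for this example.

```python
def overall_speedup(fraction_optimized, stage_speedup):
    """Amdahl's law: total speedup when only one stage of the work gets faster.

    fraction_optimized: share of the original time spent in the optimized stage (0..1)
    stage_speedup: how many times faster that stage becomes
    """
    return 1.0 / ((1.0 - fraction_optimized) + fraction_optimized / stage_speedup)


# Hypothetical split: asset I/O is 20% of load time, level generation is 80%.
asset_share = 0.20

print(round(overall_speedup(asset_share, 2.0), 3))           # asset I/O twice as fast
print(round(overall_speedup(asset_share, float("inf")), 3))  # asset I/O made free
```

Under this split, doubling asset read speed improves total load time by only about 11%, and even infinitely fast asset reads yield at most a 1.25x overall speedup, because level generation is left untouched.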

The ‘Slim’ Revolution: Deduplication and Its Impact

Armed with this newfound understanding, the developers, in collaboration with their partners at Nixxes, embarked on a mission to radically optimize the game’s file size. Their solution centered on “completely de-duplicating our data.” Data deduplication is a sophisticated technique that identifies and eliminates redundant copies of data. Instead of storing multiple identical copies of a file or data block, a deduplication system stores only one unique instance and then replaces all other instances with pointers or references to that unique copy. This process can be incredibly effective in environments where much of the data is repetitive, a common scenario in large software projects like video games with shared assets across different levels or iterations.
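The “one unique instance plus pointers” idea can be sketched as a tiny content-addressed store. This is a generic illustration, not the approach Arrowhead or Nixxes used: it deduplicates whole files by their SHA-256 digest, whereas many production systems deduplicate at the level of fixed- or variable-size chunks. The `DedupStore` class and the file paths are hypothetical.

```python
import hashlib


class DedupStore:
    """Minimal whole-file deduplicating store: each unique blob is kept once, keyed by its hash."""

    def __init__(self):
        self.blobs = {}  # SHA-256 hex digest -> bytes (the single stored copy)
        self.files = {}  # logical path -> digest (a reference to that copy)

    def put(self, path, data):
        digest = hashlib.sha256(data).hexdigest()
        self.blobs.setdefault(digest, data)  # store content only the first time it is seen
        self.files[path] = digest            # every path just points at the shared copy

    def get(self, path):
        return self.blobs[self.files[path]]

    def logical_size(self):
        """Bytes the files would occupy if every copy were stored separately."""
        return sum(len(self.blobs[d]) for d in self.files.values())

    def physical_size(self):
        """Bytes actually stored after deduplication."""
        return sum(len(b) for b in self.blobs.values())


# Hypothetical example: three levels reference the same 1 KiB texture.
store = DedupStore()
texture = b"\x00" * 1024
for level in ("level1", "level2", "level3"):
    store.put(f"{level}/rock_texture.dds", texture)
store.put("level1/music.ogg", b"\x01" * 512)

print(store.logical_size(), store.physical_size())  # 3584 1536
```

A real system would also need reference counting or garbage collection, so that blobs no longer referenced by any path can be deleted.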

The results of this effort were spectacular: a reduction from approximately 154GB to 23GB, a saving of 131GB, or roughly 85% of the original size, and a testament to the efficacy of aggressive data optimization. Crucially, the developers anticipate only a “minimal” impact on load times, estimating “seconds at most.” That expectation follows from their finding that level generation, not asset loading, was the primary factor in load times, which made the original data duplication largely unnecessary overhead.
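The headline figures are easy to check. Taking the approximate 154GB and 23GB sizes reported above at face value:

```python
original_gb = 154  # approximate original install size
slim_gb = 23       # approximate "slim" install size

saved_gb = original_gb - slim_gb
saved_share = saved_gb / original_gb
shrink_factor = original_gb / slim_gb

print(f"saved {saved_gb}GB ({saved_share:.1%}), about {shrink_factor:.1f}x smaller")
# saved 131GB (85.1%), about 6.7x smaller
```

Because both sizes are rounded, the percentage and the shrink factor are approximate.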

The “slim” version is currently available as a technical public beta on Steam, allowing players to opt-in and test its stability and performance. This phased rollout demonstrates a cautious and methodical approach to software updates, ensuring that any unforeseen issues arising from such a drastic change can be identified and resolved before the optimized version becomes the default for all players.

Beyond Gaming: The Business Imperative of Data Efficiency

While the Helldivers 2 case study is rooted in the gaming industry, its underlying principles (data optimization, efficient resource management, and an accurate understanding of performance bottlenecks) apply across all sectors. In today’s data-driven economy, enterprises grapple with an ever-growing deluge of information, from customer databases and operational logs to cloud backups and software assets. The lessons of Helldivers 2’s file size reduction offer a blueprint for how businesses can enhance efficiency, reduce costs, and improve their overall digital posture.

1. Cost Savings in Storage and Bandwidth:

The most direct and tangible benefit for businesses mirrors the Helldivers 2 experience: reduced storage requirements. Whether data resides on-premises, in hybrid cloud environments, or entirely in the public cloud, storage incurs significant costs. Smaller data sets consume less storage, which translates directly into lower monthly cloud bills (e.g., Amazon S3, Azure Blob Storage) or lower capital expenditure on physical storage infrastructure. Distributing smaller software packages or data sets also consumes less network bandwidth, leading to faster downloads, quicker deployments, and lower egress charges in cloud environments, a critical consideration for global operations.
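A back-of-the-envelope model makes the storage and bandwidth argument concrete. The per-GB prices below are hypothetical placeholders rather than any provider’s current rates, and `monthly_cost` is a name invented for this sketch; real bills also depend on storage classes, regions, request charges, and CDN caching.

```python
# Hypothetical per-GB prices for illustration only; check your provider's actual pricing.
STORAGE_USD_PER_GB_MONTH = 0.023
EGRESS_USD_PER_GB = 0.09


def monthly_cost(package_gb, downloads_per_month):
    """Storage cost for one copy of the package plus egress for every download."""
    storage = package_gb * STORAGE_USD_PER_GB_MONTH
    egress = package_gb * downloads_per_month * EGRESS_USD_PER_GB
    return storage + egress


downloads = 1_000  # hypothetical monthly download count
for label, size_gb in (("original", 154), ("slim", 23)):
    print(f"{label:>8}: ${monthly_cost(size_gb, downloads):,.2f} per month")
```

Under these assumptions egress dominates, so the cost scales linearly with package size: the slim package costs about 23/154 of the original.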

2. Enhanced Software Development and Deployment:

For software development teams, the Helldivers 2 story highlights the importance of lean engineering practices. Large, bloated applications are harder to manage, update, and deploy. By adopting principles like data deduplication, efficient asset management, and rigorous performance profiling, businesses can:

- Accelerate CI/CD Pipelines: Smaller build artifacts and deployment packages mean faster integration, testing, and deployment cycles, enabling more agile development.

- Improve Developer Experience: Less time spent downloading dependencies or waiting for builds means developers can focus more on innovation.

- Reduce Technical Debt: Proactively identifying and eliminating redundant data and inefficient code pathways prevents the accumulation of technical debt that can hinder future scalability and maintenance.
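One lightweight way to keep artifacts lean in a CI/CD pipeline is a size budget check that fails the build when the output grows past an agreed limit. The sketch below is a generic illustration; the function names, the `build/` directory, and the budget value are hypothetical, and most CI systems would simply run such a check as a script step.

```python
import os


def directory_size_bytes(root):
    """Total size in bytes of the files under root; symlinks are skipped to avoid double counting."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if not os.path.islink(path):
                total += os.path.getsize(path)
    return total


def within_budget(root, budget_bytes):
    """Return (ok, size) so a CI step can fail when the artifact exceeds its budget."""
    size = directory_size_bytes(root)
    return size <= budget_bytes, size


# Hypothetical CI usage:
#   ok, size = within_budget("build/", 500 * 1024**2)
#   if not ok:
#       raise SystemExit(f"artifact is {size} bytes, over the 500 MiB budget")
```

Tracking the reported size over time also shows which change introduced a jump, which is often the quickest route to spotting duplicated or unused assets.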

3. Operational Optimization and Digital Transformation:

Modern businesses are constantly seeking ways to optimize operations and drive digital transformation. Efficient data management is a cornerstone of this effort. From enterprise resource planning (ERP) systems to customer relationship management (CRM) platforms, every piece of software and every data repository can benefit from optimization.

- Faster System Performance: While Helldivers 2 found level generation was the bottleneck, many enterprise applications do suffer from slow data retrieval or inefficient asset loading. Optimization ensures smoother, faster operations.

- Improved User Experience: Just as gamers benefit from quicker downloads and less storage use, business users benefit from faster application load times and more responsive systems, directly impacting productivity and satisfaction.

- Scalability and Agility: A lean digital footprint makes it easier for businesses to scale their infrastructure up or down as needed, responding dynamically to market changes or operational demands without being burdened by excessive data volume.

Expert Take 1: The Strategic Importance of Data Footprint

“In the enterprise world, every gigabyte counts. The ‘Helldivers 2’ optimization isn’t just a gaming anecdote; it’s a stark reminder that inefficient data storage and application bloat translate directly into higher operational costs, slower deployments, and a compromised user experience. Prioritizing a ‘slim’ digital footprint is no longer just a technical detail; it’s a strategic imperative for financial health and competitive agility.”

— Dr. Evelyn Reed, Chief Technology Officer, Nexus Innovations Group

Optimizing Your Digital Footprint: Key Strategies for Businesses

The principles behind Helldivers 2’s success can be distilled into actionable strategies for businesses looking to optimize their own digital assets and software infrastructure.

| Strategy | Pros | Cons | Use Case Suitability |
|---|---|---|---|
| Data Deduplication | Significant storage savings, especially for repetitive data; reduces backup windows; improves data transfer efficiency. | Can be CPU-intensive during the deduplication process; requires specialized software or hardware; potential for performance impact if not carefully managed. | Large-scale data backups, virtual machine environments, archival storage, shared file systems, software asset repositories. |
| Data Compression | Reduces file sizes, saving storage and bandwidth; widely supported across various file types and systems. | Can be CPU-intensive during compression/decompression; lossy compression can reduce data quality; performance overhead. | Network transfers, general file storage, web assets (images, videos), long-term archives, database storage. |
| Asset/Code Refactoring | Eliminates redundant code and assets; improves maintainability and readability; reduces build times; enhances application performance. | Requires significant developer effort and time; potential for introducing bugs if not thoroughly tested. | Legacy applications, large software projects, microservices architectures, projects with evolving features. |
| Tiered Storage Management | Optimizes cost by moving less frequently accessed data to cheaper storage tiers; balances performance with cost. | Requires careful data classification and policy definition; introduces complexity in data retrieval. | Big data analytics, archival data, compliance data, infrequently accessed historical records. |
| Database Optimization | Improves query performance; reduces storage requirements; enhances application responsiveness. | Requires specialized database administration skills; ongoing monitoring and tuning are necessary. | Any application heavily reliant on databases (ERP, CRM, e-commerce, analytics platforms). |
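The Data Deduplication and Data Compression rows are often conflated, but compression shrinks redundancy within a piece of data rather than across copies, and how much it helps depends heavily on how repetitive the input is. A minimal illustration with Python’s standard-library `zlib` (a lossless DEFLATE implementation); the sample inputs and the `compression_ratio` helper are made up for this example.

```python
import os
import zlib


def compression_ratio(data, level=6):
    """Original size divided by compressed size, after checking the round trip is lossless."""
    compressed = zlib.compress(data, level=level)
    if zlib.decompress(compressed) != data:
        raise ValueError("round trip failed")  # should never happen for lossless DEFLATE
    return len(data) / len(compressed)


repetitive = b"2024-06-01 INFO request served in 12ms\n" * 10_000  # log-like, highly repetitive
random_bytes = os.urandom(100_000)                                 # incompressible by design

print(f"repetitive: {compression_ratio(repetitive):.0f}x")
print(f"random:     {compression_ratio(random_bytes):.2f}x")
```

Random data typically comes out slightly larger than it went in (a ratio just under 1), which is why already-compressed media such as video gains little from a second compression pass.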

Expert Take 2: The Role of AI in Future Optimization

“Looking ahead, AI and machine learning will revolutionize how we approach data optimization. From predictive analytics identifying data redundancy patterns to automated code refactoring tools and intelligent storage tiering, AI will enable businesses to maintain lean, efficient digital operations with minimal human intervention. This next wave of efficiency will unlock unprecedented value.”

— Sarah Chen, Lead AI Ethicist & Data Strategist, Quantum Leap Solutions
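The Tiered Storage Management row in the table above notes that tiering depends on careful data classification and policy definition. The simplest such policy, before any machine-learning layer, is rule-based: classify each object by how recently it was accessed. The thresholds, tier names, and `StoredObject` type below are hypothetical; managed offerings such as cloud storage lifecycle rules implement the same idea through their own configuration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical thresholds; real policies weigh access patterns, retrieval SLAs and pricing.
HOT_WINDOW = timedelta(days=30)
WARM_WINDOW = timedelta(days=180)


@dataclass
class StoredObject:
    key: str
    last_accessed: datetime


def choose_tier(obj, now):
    """Rule-based tiering: the longer since last access, the cheaper (and slower) the tier."""
    age = now - obj.last_accessed
    if age <= HOT_WINDOW:
        return "hot"
    if age <= WARM_WINDOW:
        return "warm"
    return "archive"


now = datetime(2024, 6, 1)
objects = [
    StoredObject("orders/current.parquet", now - timedelta(days=3)),
    StoredObject("orders/2023-q4.parquet", now - timedelta(days=90)),
    StoredObject("logs/2021.tar.gz", now - timedelta(days=900)),
]
for obj in objects:
    print(f"{obj.key:<24} -> {choose_tier(obj, now)}")
```

Predictive approaches of the kind described in the quote would replace the fixed windows with forecasts of future access, but the decision being made per object stays the same.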

Cybersecurity and Agility: An Indirect Benefit

While the primary focus of file size reduction is usually performance and cost, there can be subtle but significant benefits for cybersecurity and operational agility. Software with less redundant code and fewer bundled components can present a smaller attack surface, and less redundant code leaves fewer places for vulnerabilities to hide. Moreover, smaller, streamlined updates can be downloaded and deployed faster, so security patches reach systems sooner and the window of exposure to newly discovered threats shrinks. When the speed of patching matters against fast-moving attackers, an agile and efficient software development lifecycle, enabled by practices like those seen in Helldivers 2, is a powerful asset.

The Path Forward: A Call for Continuous Optimization

The story of Helldivers 2’s radical file size reduction is more than just a win for gamers; it’s a powerful illustration of intelligent engineering and strategic optimization that holds profound lessons for the entire digital industry. It underscores the critical importance of understanding performance bottlenecks, challenging assumptions, and embracing innovative data management techniques.

For business professionals, entrepreneurs, and tech leaders, this case serves as a call to action:

- Audit Your Digital Footprint: Regularly assess your software applications, data storage, and cloud infrastructure for inefficiencies and redundancies.

- Prioritize Performance Profiling: Invest in tools and processes to accurately identify performance bottlenecks in your systems, rather than making assumptions.

- Embrace Lean Development: Encourage software development practices that prioritize efficiency, modularity, and continuous optimization from the outset.

- Leverage Modern Technologies: Explore the latest advancements in data compression, deduplication, and cloud-native optimization features offered by your providers.
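Careful measurement is what revealed level generation, rather than asset loading, as the real bottleneck in Helldivers 2. A minimal way to get a per-stage breakdown in Python is a timing context manager around each phase. In this sketch `load_assets` and `generate_level` are hypothetical stand-ins that just sleep; for real code, a full profiler such as the standard library’s `cProfile` gives function-level detail.

```python
import time
from contextlib import contextmanager

timings = {}  # stage name -> accumulated wall-clock seconds


@contextmanager
def timed(stage):
    """Add the wall-clock time spent inside the with-block to timings[stage]."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[stage] = timings.get(stage, 0.0) + (time.perf_counter() - start)


def load_assets():
    time.sleep(0.05)  # hypothetical stand-in for reading asset files


def generate_level():
    time.sleep(0.20)  # hypothetical stand-in for procedural level generation


with timed("asset_loading"):
    load_assets()
with timed("level_generation"):
    generate_level()

total = sum(timings.values())
for stage, seconds in sorted(timings.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{stage:<18} {seconds:6.3f}s  {seconds / total:6.1%}")
```

Sorting stages by their share of total time puts the largest cost first, which is where optimization effort pays off most.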

In a rapidly evolving digital landscape, where data volumes continue to explode and resource efficiency is key to sustainability and competitiveness, the journey towards a ‘slim’ and optimized digital environment is not merely an option—it’s an essential strategy for long-term success. Just as Helldivers 2 has become significantly more accessible and efficient, businesses too can unlock substantial value by committing to continuous optimization and smart data management.

FAQ Section

Q1: How much space did Helldivers 2 save?

A1: Helldivers 2 saved a massive 131GB of space, reducing its PC installation size from approximately 154GB down to a lean 23GB.

Q2: Why was Helldivers 2 so large initially?

A2: The game’s initial large size was due to a deliberate “data duplication” strategy. The developers stored multiple copies of assets in an attempt to improve load times for players using mechanical hard disk drives (HDDs). However, this proved largely ineffective, because the main bottleneck for load times was actually “level generation.”

Q3: What is data deduplication?

A3: Data deduplication is a sophisticated technique that identifies and eliminates redundant copies of data. Instead of storing multiple identical copies, it stores only one unique instance and replaces all other instances with pointers or references to that unique copy, significantly reducing storage requirements.

Q4: How does this optimization benefit businesses?

A4: Businesses can benefit significantly through cost savings on storage and bandwidth, enhanced software development (faster CI/CD, better developer experience, reduced technical debt), and operational optimization (faster system performance, improved user experience, greater scalability and agility).

Q5: Will this “slim” version impact game performance?

A5: The developers anticipate only a “minimal” impact on load times, estimating “seconds at most.” This is because their analysis showed that level generation, not asset loading, was the primary factor in load times, making the original data duplication largely unnecessary overhead.

Conclusion

The remarkable file size reduction of Helldivers 2 stands as a powerful testament to the value of strategic optimization and efficient data management. This achievement, cutting the game’s footprint by 131GB, transcends the gaming world, offering profound lessons for businesses across all sectors. It underscores the critical importance of scrutinizing assumptions, precisely identifying performance bottlenecks, and embracing techniques like data deduplication. For enterprises grappling with ever-expanding data volumes and the escalating costs of digital infrastructure, the journey towards a lean, optimized digital footprint is no longer a luxury but a strategic imperative for financial health, operational agility, and sustained competitiveness. By committing to continuous optimization, businesses can unlock substantial value, enhance user experience, and secure their position in the rapidly evolving digital landscape.